Integrating Copilot Agents with Office Add-Ins: What Developers Need to Know

Microsoft Build 2025 delivered what is arguably the most significant shift in the Office add-in development model since the introduction of the web add-in platform itself: add-ins can now be bundled with Copilot agents, allowing a single unified package to deliver both traditional task pane functionality and conversational AI capabilities. For developers and organisations that have invested in custom Office add-ins, this is not just a new feature – it is a fundamental change in how users will discover, interact with, and derive value from your extensions.

At McKenna Consultants, we have been building Microsoft Office add-ins for enterprise clients for over a decade, from the early days of COM and VSTO through to the modern web-based platform. We have been working with the unified manifest and Copilot integration capabilities since they became available in preview. This article explains what the integration means, how it works technically, and how to plan an add-in that works effectively through both the traditional task pane and through Copilot chat.

What Changed at Build 2025

Prior to Build 2025, Office add-ins and Copilot agents existed as separate entities. An add-in provided a task pane, custom functions, or event-based activations within an Office application. A Copilot agent (or plugin) extended Microsoft 365 Copilot with custom skills and data access. If you wanted both, you built and deployed two separate things.

The Build 2025 announcement unified these models. A single app package, defined by a unified manifest, can now declare both add-in capabilities (task panes, custom functions, event handlers) and Copilot agent capabilities (skills, conversation handlers, data connections). When a user installs the app, they get both the traditional add-in experience and the ability to interact with its capabilities through natural language in Copilot.

This matters for three reasons:

- Single deployment and management. IT administrators deploy one package instead of two. Users install one app instead of two. There is a single identity, single permission model, and single update channel.

- Copilot as a new interaction surface. Users can now invoke add-in functionality by asking Copilot to do something in natural language, rather than navigating to a task pane and clicking through a UI. This lowers the barrier to using your add-in’s capabilities.

- Contextual intelligence. Copilot can invoke your add-in’s capabilities contextually – for example, when a user is drafting an email and Copilot determines that one of your add-in’s skills is relevant to what they are trying to accomplish.

The Unified Manifest

The unified manifest is the technical foundation of Office add-in Copilot agent integration. It replaces the legacy XML manifest (manifest.xml) with a JSON-based manifest that aligns with the Teams app manifest schema, extended with properties for Office add-in and Copilot agent capabilities.

Manifest Structure

A unified manifest for an app with both add-in and Copilot capabilities includes the following key sections:

$schema and manifestVersion – Identify the manifest schema and its version. For Copilot integration, you need manifest version 1.17 or later.

id – A unique identifier (GUID) for the app. This single ID is used across all surfaces – Teams, Office, Copilot.

extensions – This is where the Office add-in capabilities are declared. It contains objects defining task panes, custom functions, event-based activations, and their associated runtime configurations.

copilotAgents – The new section introduced for Copilot integration. This declares the agent’s skills, which are the specific capabilities that Copilot can invoke. Each skill maps to a function or API endpoint that your app exposes.

authorization – Defines the permissions your app requires, covering both the add-in’s needs (e.g., read/write access to the mailbox) and the Copilot agent’s needs (e.g., access to Microsoft Graph resources).

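Here is a simplified example showing how add-in and Copilot declarations coexist in one manifest. For brevity, the agent’s name, instructions, capabilities, and actions are shown inline; in the published schema, each declarativeAgents entry instead points to a separate declarative agent file.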

    {
      "$schema": "https://developer.microsoft.com/json-schemas/teams/v1.17/MicrosoftTeams.schema.json",
      "manifestVersion": "1.17",
      "id": "00000000-0000-0000-0000-000000000000",
      "name": { "short": "Contract Reviewer" },
      "description": {
        "short": "Review and analyse contracts",
        "full": "Reviews contracts for key clauses, risks, and compliance requirements."
      },
      "extensions": [
        {
          "requirements": { "capabilities": [{ "name": "Mailbox", "minVersion": "1.13" }] },
          "runtimes": [
            {
              "id": "TaskPaneRuntime",
              "type": "general",
              "code": { "page": "https://contoso.com/taskpane.html" }
            }
          ],
          "ribbons": [
            {
              "contexts": ["mailRead"],
              "tabs": [
                {
                  "id": "ReviewTab",
                  "groups": [
                    {
                      "id": "ReviewGroup",
                      "controls": [
                        {
                          "id": "OpenTaskPane",
                          "type": "button",
                          "label": "Review Contract",
                          "icons": [{ "size": 16, "url": "https://contoso.com/icon-16.png" }],
                          "action": { "type": "openPage", "view": "TaskPaneRuntime" }
                        }
                      ]
                    }
                  ]
                }
              ]
            }
          ]
        }
      ],
      "copilotAgents": {
        "declarativeAgents": [
          {
            "id": "contractReviewer",
            "name": "Contract Reviewer",
            "description": "Analyses contracts for key clauses, risks, and compliance issues",
            "instructions": "You are a contract review assistant. When asked to review a contract, extract key clauses, identify potential risks, and flag compliance concerns.",
            "capabilities": [
              {
                "name": "GraphConnectors",
                "connections": [{ "connection_id": "contoso-contracts" }]
              }
            ],
            "actions": [
              {
                "id": "analyseContract",
                "file": "api-plugin.json"
              }
            ]
          }
        ]
      }
    }
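A build script can catch a manifest that declares only one of the two surfaces before it reaches a tenant. The following is a minimal sketch, not official tooling: the `ManifestLike` type and the `checkDualMode` helper are hypothetical names, and the 1.17 threshold is simply the minimum version stated above.

```typescript
// Hypothetical, partial view of a parsed unified manifest (only the fields checked here).
interface ManifestLike {
  manifestVersion?: string;
  extensions?: unknown[];
  copilotAgents?: { declarativeAgents?: unknown[] };
}

// Compares a "major.minor" version string against a minimum.
// Non-numeric values (for example preview labels) are treated as not meeting the minimum.
function meetsMinimumVersion(version: string, minMajor: number, minMinor: number): boolean {
  const parts = version.split(".");
  const major = parseInt(parts[0], 10);
  const minor = parseInt(parts[1] || "0", 10);
  if (isNaN(major) || isNaN(minor)) {
    return false;
  }
  return major > minMajor || (major === minMajor && minor >= minMinor);
}

// Returns a list of human-readable problems; an empty list means both surfaces are declared.
function checkDualMode(manifest: ManifestLike): string[] {
  const problems: string[] = [];
  if (!manifest.manifestVersion || !meetsMinimumVersion(manifest.manifestVersion, 1, 17)) {
    problems.push("manifestVersion should be 1.17 or later.");
  }
  if (!manifest.extensions || manifest.extensions.length === 0) {
    problems.push("No add-in capabilities declared under extensions.");
  }
  const agents = manifest.copilotAgents && manifest.copilotAgents.declarativeAgents;
  if (!agents || agents.length === 0) {
    problems.push("No agents declared under copilotAgents.declarativeAgents.");
  }
  return problems;
}
```

In use, the script would read the manifest file, pass the result of `JSON.parse` to `checkDualMode`, and fail the build if the returned list is not empty.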

Migration from XML Manifest

If you have an existing Office add-in using the legacy XML manifest, migration to the unified manifest is a prerequisite for Copilot integration. The migration involves:

- Converting manifest structure. The XML elements map to JSON properties, but the structure differs significantly. Microsoft provides tooling in the Teams Toolkit for Visual Studio Code (renamed the Microsoft 365 Agents Toolkit in 2025) that can assist with this conversion.

- Updating runtime declarations. The unified manifest uses a different model for declaring runtimes (the execution contexts for your code). Task pane runtimes, function runtimes, and event-based runtimes are declared explicitly.

- Testing across surfaces. The unified manifest deploys your app across Teams, Outlook, and other Microsoft 365 surfaces. You need to verify that your add-in works correctly in each surface, as there are subtle differences in API availability and behaviour.

- Permission model alignment. The unified manifest uses a centralised permission model. Review your existing permissions and ensure they are correctly declared in the new format.

McKenna Consultants has guided multiple enterprise clients through this migration. The technical conversion is typically straightforward, but testing and validating across surfaces require systematic effort.

How Copilot Invokes Add-In Capabilities

The mechanism by which Copilot invokes your add-in’s capabilities is one of the most important aspects of the integration to understand, as it directly affects how you design your agent’s skills.

The Invocation Flow

- User prompt. The user types a natural language request in Copilot – for example, “Review the contract attached to this email for payment terms and liability clauses.”

- Intent matching. Copilot’s orchestration layer evaluates the user’s intent against the available skills from all installed agents. It uses the agent’s name, description, and skill descriptions to determine relevance.

- Skill selection. Copilot selects the most relevant skill and prepares to invoke it. It extracts parameters from the user’s prompt based on the skill’s parameter schema.

- Confirmation (optional). Depending on the skill’s configuration and the organisation’s policies, Copilot may ask the user to confirm before invoking the skill – particularly for skills that modify data.

- Execution. Copilot invokes the skill, which executes your code. This code can use the Office.js APIs to interact with the current document, email, or spreadsheet, and can call external APIs for additional data or processing.

- Response. Your skill returns a result to Copilot, which formats it into a natural language response for the user. This response can include adaptive cards for rich formatting.
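The execution and response steps above can be sketched as a handler that receives the parameters Copilot extracted and returns a structured result. This is an illustration under stated assumptions rather than the platform's API: the handler name, the parameter names, and the JSON-string message format are all hypothetical, and the keyword check stands in for real contract analysis.

```typescript
// Hypothetical parameter shape that Copilot would extract from the user's prompt.
interface AnalyseContractParams {
  documentText: string;
  focusAreas: string[]; // e.g. ["payment terms", "liability"]
}

// Hypothetical result shape returned to Copilot.
interface AnalyseContractResult {
  summary: string;
  findings: { area: string; found: boolean }[];
}

// Placeholder business logic: reports whether each focus area is mentioned in the text.
function analyseContract(params: AnalyseContractParams): AnalyseContractResult {
  const text = params.documentText.toLowerCase();
  const findings = params.focusAreas.map((area) => ({
    area: area,
    found: text.indexOf(area.toLowerCase()) !== -1,
  }));
  const hits = findings.filter((f) => f.found).length;
  return {
    summary: "Found " + hits + " of " + findings.length + " requested topics in the contract.",
    findings: findings,
  };
}

// Assumed entry point: parameters arrive as a JSON string and the result is returned as a JSON string.
// In a real add-in, documentText would be read through Office.js rather than passed in.
function analyseContractHandler(message: string): string {
  const params = JSON.parse(message) as AnalyseContractParams;
  return JSON.stringify(analyseContract(params));
}
```

Keeping `analyseContract` free of any Copilot-specific types means the same function can be called from the task pane.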

Designing Effective Skills

The quality of the Copilot experience depends heavily on how well you design your agent’s skills. Key principles:

Clear, specific descriptions. Copilot uses your skill descriptions to determine when to invoke them. Vague descriptions lead to missed invocations (Copilot does not realise your skill is relevant) or false invocations (Copilot invokes your skill for unrelated requests). Write descriptions that are specific about what the skill does, what inputs it expects, and what outputs it produces.

Granular skills over monolithic skills. Rather than creating a single skill that “does everything your add-in can do,” create multiple focused skills that each handle a specific task. This improves Copilot’s ability to select the right skill and improves the quality of parameter extraction.

Parameterised inputs. Define clear parameter schemas for your skills. Copilot extracts parameters from the user’s natural language prompt and passes them to your skill. Well-defined parameters with descriptions, types, and examples help Copilot extract the right values.

Structured responses. Return structured data from your skills rather than free-form text. Copilot can format structured data into clear, well-organised responses. Include both a summary for Copilot to narrate and detailed data for adaptive card rendering.
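As a rough illustration of these principles, a skill can be described by a small typed specification and its incoming parameters checked before any work is done. The `SkillSpec` shape, the `findClauses` skill, and the `validateParams` helper below are hypothetical and do not mirror the plugin file schema.

```typescript
type ParamType = "string" | "number" | "boolean";

// Hypothetical description of one skill parameter.
interface ParamSpec {
  type: ParamType;
  description: string;
  required: boolean;
  example?: string | number | boolean;
}

// Hypothetical description of one focused skill.
interface SkillSpec {
  name: string;
  description: string;
  parameters: { [name: string]: ParamSpec };
}

// A granular skill with a specific description and well-described parameters.
const findClauses: SkillSpec = {
  name: "findClauses",
  description:
    "Finds clauses on a given topic in the contract that is currently open and returns each clause with its section number.",
  parameters: {
    topic: {
      type: "string",
      description: "The clause topic to look for.",
      required: true,
      example: "payment terms",
    },
    maxResults: {
      type: "number",
      description: "Maximum number of clauses to return.",
      required: false,
      example: 5,
    },
  },
};

// Returns a list of problems with the extracted parameters; empty means they are usable.
function validateParams(spec: SkillSpec, params: { [name: string]: unknown }): string[] {
  const problems: string[] = [];
  for (const name of Object.keys(spec.parameters)) {
    const paramSpec = spec.parameters[name];
    const value = params[name];
    if (value === undefined) {
      if (paramSpec.required) {
        problems.push('Missing required parameter "' + name + '".');
      }
    } else if (typeof value !== paramSpec.type) {
      problems.push('Parameter "' + name + '" should be a ' + paramSpec.type + ".");
    }
  }
  return problems;
}
```

Validation messages phrased like this can be returned to Copilot as they are, so that it can ask the user for the missing value.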

Impact on Add-In UX Design

The introduction of Copilot as an interaction surface has significant implications for how you design your add-in’s user experience. The same capabilities now need to work through two fundamentally different interaction models: a visual task pane with buttons and forms, and a conversational chat interface.

Task Pane Design in the Copilot Era

The task pane remains the primary interface for complex, multi-step workflows where the user needs to see and interact with rich UI elements – forms, data tables, visualisations, configuration panels. Copilot integration does not eliminate the need for a well-designed task pane.

However, the task pane’s role shifts. Instead of being the only way to access your add-in’s capabilities, it becomes the interface for power users and complex scenarios. Casual or one-off interactions migrate to Copilot. This means:

- The task pane can focus on depth. Since simple tasks are handled through Copilot, the task pane can be designed around complex workflows rather than being kept minimal for the sake of basic tasks.

- Deep-linking from Copilot. When a Copilot interaction leads to a task that requires the task pane (e.g., “I’ve found three issues in this contract – would you like to review them in detail?”), the agent can open the task pane to a specific view with relevant context pre-loaded.

- Consistent state. Actions taken through Copilot and actions taken through the task pane should reflect the same underlying state. If the user reviews a contract clause through Copilot and then opens the task pane, the task pane should show that the clause has been reviewed.
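One way to picture consistent state and deep-linking is a single store that both surfaces write to, plus a helper that builds the link the agent hands to the task pane. This is a minimal sketch: `ReviewStore`, `buildTaskPaneLink`, and the `view` and `clause` query parameter names are hypothetical, and a real add-in would persist the state somewhere both surfaces can reach, such as a backend service.

```typescript
type Surface = "copilot" | "taskpane";

interface ClauseState {
  status: "unreviewed" | "reviewed";
  reviewedVia?: Surface;
}

// Single source of truth for review state, used by both the Copilot skill and the task pane.
class ReviewStore {
  private clauses: { [clauseId: string]: ClauseState } = {};

  markReviewed(clauseId: string, via: Surface): void {
    this.clauses[clauseId] = { status: "reviewed", reviewedVia: via };
  }

  get(clauseId: string): ClauseState {
    return this.clauses[clauseId] || { status: "unreviewed" };
  }
}

// Builds a task pane URL that opens a specific view with a clause pre-selected.
// The query parameter names are assumptions for illustration.
function buildTaskPaneLink(baseUrl: string, view: string, clauseId: string): string {
  return baseUrl + "?view=" + encodeURIComponent(view) + "&clause=" + encodeURIComponent(clauseId);
}
```

When the user reviews a clause in chat, the skill calls `markReviewed(clauseId, "copilot")`. The task pane later calls `get(clauseId)` and renders the clause as already reviewed.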

Designing for Conversational Interaction

Designing for Copilot requires thinking about your add-in’s capabilities as tasks that can be expressed in natural language. This is a different design discipline from traditional UI design:

Task-oriented thinking. What are the discrete tasks your users perform? Each task is a potential Copilot skill. Frame them in terms of user intent: “Check this document for compliance issues,” “Insert the standard NDA clause,” “Summarise the key dates in this contract.”

Progressive disclosure through conversation. In a task pane, you use UI hierarchy (tabs, sections, accordions) for progressive disclosure. In Copilot, you use follow-up questions and multi-turn conversation. Design your skills to return actionable results with clear follow-up options.

Error handling in natural language. When something goes wrong in a task pane, you show an error message. In Copilot, your skill needs to return an error that Copilot can explain in natural language. “I could not access the contract because the attachment is not a supported file type. I can review Word and PDF documents” is more useful than a generic error code.
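A skill can make this easier by mapping its internal failure codes to a message and a suggested next step before returning. The sketch below is illustrative only: the error codes and the `SkillError` shape are hypothetical, and only the unsupported file type wording comes from the example above.

```typescript
type SkillErrorCode = "UNSUPPORTED_FILE_TYPE" | "ATTACHMENT_NOT_FOUND" | "SERVICE_UNAVAILABLE";

// Hypothetical error payload that Copilot can turn into a natural language explanation.
interface SkillError {
  ok: false;
  code: SkillErrorCode;
  message: string;    // what went wrong, in plain language
  suggestion: string; // what the user can do next
}

function describeError(code: SkillErrorCode): SkillError {
  switch (code) {
    case "UNSUPPORTED_FILE_TYPE":
      return {
        ok: false,
        code: code,
        message: "I could not access the contract because the attachment is not a supported file type.",
        suggestion: "I can review Word and PDF documents.",
      };
    case "ATTACHMENT_NOT_FOUND":
      return {
        ok: false,
        code: code,
        message: "I could not find an attachment on this email.",
        suggestion: "Open the email that contains the contract and try again.",
      };
    case "SERVICE_UNAVAILABLE":
      return {
        ok: false,
        code: code,
        message: "The contract review service did not respond.",
        suggestion: "Please try again in a few minutes.",
      };
  }
}
```

Keeping the code alongside the message lets the task pane reuse the same mapping for its own error banner.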

Planning Your Add-In for Dual-Mode Operation

For organisations building new add-ins or upgrading existing ones, here is our recommended approach to planning for both task pane and Copilot operation.

Step 1: Capability Audit

List every capability your add-in provides. For each capability, classify it:

- Copilot-primary: Simple, well-defined tasks that are natural to express in language. Examples: “Look up this client’s contract terms,” “Insert a compliance disclaimer.” These should be implemented as Copilot skills and may not need task pane equivalents.

- Dual-mode: Capabilities that work in both modes. Examples: “Summarise this document” (Copilot returns a text summary; task pane shows a structured summary with highlighting). These need both implementations.

- Task pane-primary: Complex, visual, or multi-step workflows that require rich UI. Examples: side-by-side document comparison, spreadsheet data validation with a results table, configuration panels. These remain task pane features and may be linked from Copilot responses.
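The audit can be kept as a small typed inventory so that the work implied by each classification is explicit. This is only a sketch of one way to record it; the `Capability` type and the helper names are hypothetical, and the entries are the examples given above.

```typescript
type Mode = "copilot-primary" | "dual-mode" | "taskpane-primary";

interface Capability {
  name: string;
  mode: Mode;
}

const capabilityAudit: Capability[] = [
  { name: "Look up a client's contract terms", mode: "copilot-primary" },
  { name: "Insert a compliance disclaimer", mode: "copilot-primary" },
  { name: "Summarise this document", mode: "dual-mode" },
  { name: "Side-by-side document comparison", mode: "taskpane-primary" },
];

// Copilot-primary and dual-mode capabilities each need a Copilot skill.
function needsCopilotSkill(capability: Capability): boolean {
  return capability.mode !== "taskpane-primary";
}

// Dual-mode and task pane-primary capabilities need task pane UI.
// Copilot-primary capabilities are assumed here not to need a task pane equivalent.
function needsTaskPaneUi(capability: Capability): boolean {
  return capability.mode !== "copilot-primary";
}
```

Filtering the inventory with each helper gives the list of skills to write and the list of task pane views to build.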

Step 2: Shared Business Logic

Architect your code so that business logic is shared between the task pane and Copilot code paths. Both should call the same underlying functions for data retrieval, processing, and document manipulation. The difference should be only in the input (UI events vs Copilot skill invocation) and the output (DOM updates vs skill response).

This shared architecture ensures consistent behaviour regardless of how the user triggers a capability, and reduces maintenance burden.
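A minimal sketch of this layering follows, assuming a hypothetical "key dates" capability: one core function holds the logic, and two thin adapters shape its output for each surface. The function names and the "Label: YYYY-MM-DD" line format are illustrative assumptions.

```typescript
interface KeyDate {
  label: string;
  isoDate: string;
}

// Shared core: finds lines of the form "Label: YYYY-MM-DD". No UI or Copilot dependencies.
function extractKeyDates(text: string): KeyDate[] {
  const dates: KeyDate[] = [];
  for (const line of text.split("\n")) {
    const match = line.match(/^\s*(.+?):\s*(\d{4}-\d{2}-\d{2})\s*$/);
    if (match) {
      dates.push({ label: match[1], isoDate: match[2] });
    }
  }
  return dates;
}

// Escapes text taken from the document before it is placed in markup.
function escapeHtml(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Copilot adapter: returns a summary to narrate plus structured data.
function keyDatesForCopilot(text: string): { summary: string; dates: KeyDate[] } {
  const dates = extractKeyDates(text);
  return { summary: "Found " + dates.length + " key date(s).", dates: dates };
}

// Task pane adapter: returns markup for the caller to place in the DOM.
function keyDatesForTaskPane(text: string): string {
  const items = extractKeyDates(text).map(
    (d) => "<li>" + escapeHtml(d.label) + ": " + d.isoDate + "</li>"
  );
  return "<ul>" + items.join("") + "</ul>";
}
```

Because both adapters call `extractKeyDates`, a fix to the parsing logic reaches the task pane and Copilot at the same time.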

Step 3: Unified Manifest Development

Build the unified manifest iteratively. Start with your existing add-in capabilities declared in the extensions section. Then add Copilot skills one at a time in the copilotAgents section, testing each skill’s invocation, parameter extraction, and response quality.

Microsoft’s Teams Toolkit for Visual Studio Code supports unified manifest development, including local debugging for both add-in and Copilot scenarios.

Step 4: Testing Strategy

Testing dual-mode add-ins requires two distinct testing approaches:

Task pane testing follows familiar patterns: UI testing with frameworks like Playwright or Cypress, API mocking, and cross-browser verification. This is established practice.

Copilot skill testing is newer and needs to cover:

- Intent matching: Does Copilot invoke your skill for the prompts you expect? Does it avoid invoking your skill for unrelated prompts?

- Parameter extraction: Does Copilot correctly extract parameters from varied natural language expressions?

- Response quality: Does the skill response render well when Copilot formats it?

- Edge cases: How does the skill handle missing parameters, ambiguous requests, or errors?

Build a test matrix of natural language prompts and expected outcomes, and run it regularly as you iterate on skill descriptions and parameters.
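Such a matrix can be kept as data and checked with a small runner. The sketch below assumes a hypothetical `SkillResolver` that reports which skill was invoked for a prompt. In practice that answer might be recorded by hand from a Copilot session, so the keyword-based resolver here is purely a stand-in and says nothing about how Copilot matches intent.

```typescript
interface PromptCase {
  prompt: string;
  expectedSkill: string | null; // null means no skill from this app should be invoked
}

// Reports which skill was invoked for a prompt, or null if none was.
type SkillResolver = (prompt: string) => string | null;

const promptMatrix: PromptCase[] = [
  { prompt: "Review the contract attached to this email for payment terms", expectedSkill: "analyseContract" },
  { prompt: "Check this agreement for liability clauses", expectedSkill: "analyseContract" },
  { prompt: "What is the weather in Leeds tomorrow?", expectedSkill: null },
];

// Returns the cases whose observed skill differs from the expected one.
function findMismatches(cases: PromptCase[], resolve: SkillResolver): PromptCase[] {
  return cases.filter((c) => resolve(c.prompt) !== c.expectedSkill);
}

// Stand-in resolver for demonstration only: matches on the word "contract".
function keywordResolver(prompt: string): string | null {
  return prompt.toLowerCase().indexOf("contract") !== -1 ? "analyseContract" : null;
}
```

Running `findMismatches(promptMatrix, keywordResolver)` flags the "agreement" prompt. A real run catches the same kind of gap, where the skill description needs to cover a synonym.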

What This Means for Custom Outlook Add-In Development

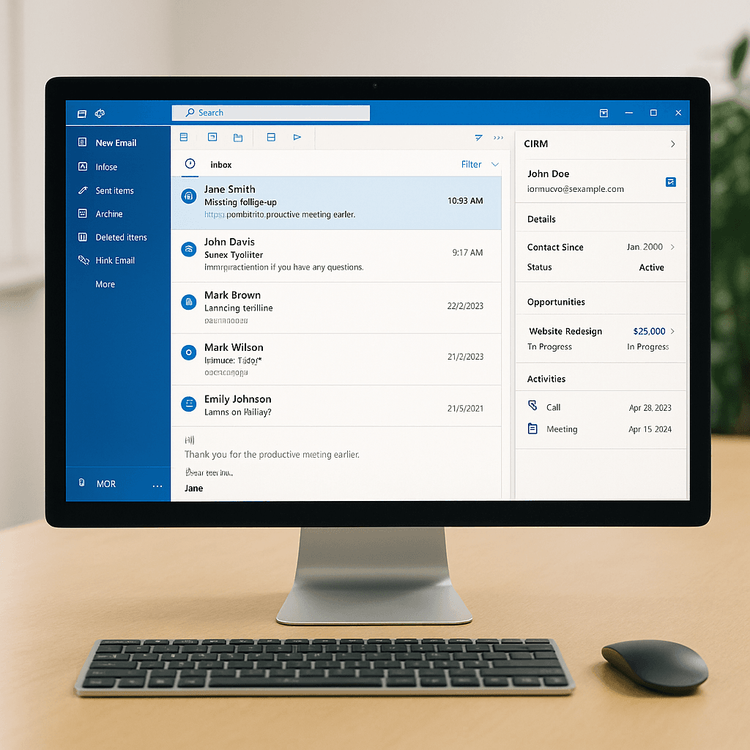

The Copilot integration is particularly impactful for Outlook add-ins, where the conversational paradigm aligns naturally with email-centric workflows. Consider these scenarios:

- Email triage: “Summarise the key action items from my unread emails this morning” – the agent scans emails, extracts action items using your business logic, and presents a prioritised summary.

- Template insertion: “Draft a response to this client using our standard project update template” – the agent retrieves the template, populates it with context from the email thread, and inserts it into the reply.

- Data lookup: “What is the contract renewal date for Acme Ltd?” – the agent queries your CRM or document management system and returns the answer directly in chat.

These are tasks that previously required the user to open the add-in’s task pane, navigate to the right function, and manually input parameters. With Copilot integration, they become single-sentence natural language requests.

For organisations in the UK investing in custom Outlook add-in development, building with Copilot integration from the start is strongly recommended. The incremental development effort is modest compared to retrofitting it later, and the user experience improvement is substantial.

Practical Considerations and Limitations

As with any new platform capability, there are practical considerations to be aware of:

Copilot licence requirements. Users need a Microsoft 365 Copilot licence to access the Copilot agent capabilities of your app. The add-in capabilities remain available without Copilot. Design your app so that the add-in is fully functional standalone, with Copilot providing an enhanced experience for licensed users.

Organisational rollout. IT administrators control which Copilot agents are available in their tenant. Your unified app needs to pass the organisation’s review process for both the add-in and agent capabilities.

Skill invocation latency. Copilot skill invocations involve an LLM inference step (for intent matching and parameter extraction) in addition to your skill’s execution time. Total response times are typically 2–5 seconds, which is acceptable for conversational interaction but slower than a direct button click in a task pane. Design your skills accordingly – do not move latency-sensitive interactions to Copilot.

Evolving platform. The Copilot agent platform is evolving rapidly. APIs, manifest schemas, and capabilities are being updated frequently. Build with extensibility in mind, and plan for regular updates to your manifest and agent configuration.

Conclusion

The integration of Copilot agents with Office add-ins represents a paradigm shift in how enterprise users interact with custom functionality in Microsoft 365. The unified manifest provides a clean technical foundation for delivering both visual and conversational experiences from a single app package. For developers, the key challenge is not the technical integration itself – which is well-documented and well-tooled – but the design thinking required to create capabilities that work effectively across both interaction models.

McKenna Consultants is at the forefront of Microsoft Copilot custom agent development in the UK. We combine deep expertise in Office add-in development with practical experience in AI agent architecture to help organisations build add-ins that are ready for the Copilot era. Whether you are building a new add-in, migrating from the legacy manifest, or adding Copilot capabilities to an existing add-in, contact our team to discuss your requirements.